Abstract

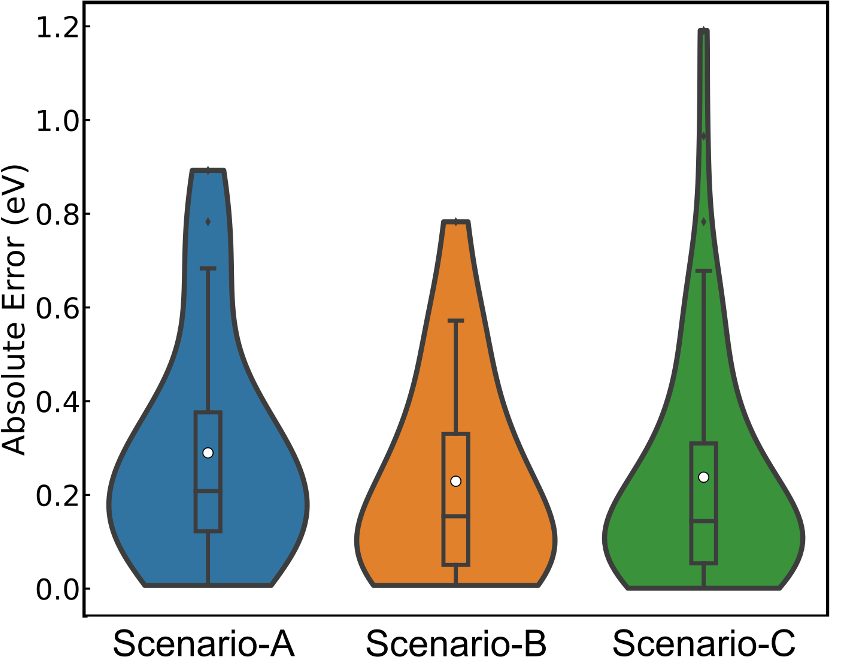

The rate performance of several applications, such as batteries, fuel cells, and electrochemical sensors, depends exponentially on the ionic migration barrier (Em) within solids, a quantity that is difficult to estimate. Previous approaches to identifying materials with low Em have often relied on imprecise descriptors or rules of thumb. Here, we present a graph-neural-network-based architecture that leverages principles of transfer learning to efficiently and accurately predict Em across a variety of materials. We use a model (labeled MPT) that has been simultaneously pre-trained on seven bulk properties, introduce architectural modifications to build inductive bias on different migration pathways in a structure, and subsequently fine-tune (FT) on a manually curated, literature-derived, first-principles computational dataset of 619 Em values. Importantly, our best-performing FT model (labeled MODEL-3, based on test set scores) demonstrates substantially better accuracy than classical machine learning methods, graph models trained from scratch, and a universal machine-learned interatomic potential. On the test set, it achieves an R² score of 0.703 ± 0.109 and a mean absolute error of 0.261 ± 0.034 eV, and it classifies ‘good’ ionic conductors with 80% accuracy. Thus, our work demonstrates the effective use of FT strategies and MPT architectural modifications to predict Em, and the approach can be extended to predict other data-scarce material properties.
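To make the exponential dependence on Em concrete, the following is a minimal sketch that assumes a simple Arrhenius-type hop rate, rate ∝ exp(−Em / (kB·T)), with identical prefactors for the two materials being compared. The Arrhenius form, the function name `arrhenius_rate_ratio`, and the example barrier values are illustrative assumptions and are not taken from the work itself.

```python
import math

# Boltzmann constant in eV/K (CODATA value)
K_B_EV_PER_K = 8.617333262e-5


def arrhenius_rate_ratio(em_low_ev, em_high_ev, temperature_k=300.0):
    """Ratio of hop rates (low-barrier / high-barrier material).

    Assumes an Arrhenius rate, exp(-Em / (kB * T)), and equal prefactors,
    so the prefactors cancel and only the barrier difference matters.
    """
    delta_ev = em_high_ev - em_low_ev
    return math.exp(delta_ev / (K_B_EV_PER_K * temperature_k))


# Hypothetical barriers of 0.3 eV and 0.4 eV, compared at 300 K
ratio = arrhenius_rate_ratio(0.3, 0.4)
print(f"Rate ratio for a 0.1 eV barrier difference at 300 K: {ratio:.1f}")
```

Under these assumptions, a barrier difference of only 0.1 eV changes the room-temperature hop rate by well over an order of magnitude, which is why small errors in a predicted Em matter for screening.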